Hosting a static Hugo site on Amazon with S3, Route 53, SSL, HTTP/2, and the Cloudfront CDN

A forum foray into end-of-year management summaries (with ledger-cli) had a handful of people asking about my blog setup and its speed. I realized it’s changed significantly since my posts from three years back (when I was using Jekyll and Gatsby), so it’s time for an update post.

TLDR

The benefits of this setup are:

- very fast - thanks to static content and a CDN (content delivery network)

- cheap - pennies-a-glass: it costs me ~$1 USD a month, even with very large amounts of traffic

- discovery - unlike setups on managed providers like GitHub Pages, etc., it’s SEO-friendly

- easy-to-operate - simple commands to set up and execute deploys

- zero-maintenance - other than Amazon SSL cert renewals (now automatic), I have not touched it in nearly 3 years

Despite the fact I was not the biggest Go fan at the time, I tried Hugo. Hugo was blazingly fast to build, had a heap of convenience features I really liked, a nice ecosystem of plugins, and some decent themes. It also ships as a single static binary. While Jekyll and Gatsby are both nice, both were dead slow to generate sites (and every Node update kept breaking Gatsby or its plugins when I was using it). Hugo also takes care of your asset pipeline for you, which can be a major headache with other static site generators.

Hugo was also simpler to bend to my will in some respects. It plugged cleanly (and with a simple declarative syntax) into cloud ecosystems, which made deploys easy. And with a little front-end work, you can get the same SPA-like effects Gatsby proponents point to by pre-fetching pages; at least for my use cases, that made Hugo the winner on speed.

YMMV, but I’ve been very happy with the results over the last couple years and it’s allowed me to focus on writing the blog, rather than fiddling with config or changing setups.

Also, a bit of a rant, even though I’ve said this before: go with static sites unless you absolutely, positively need the dynamism a backend database and servers provide. The benefits of static are legion, from speed to security to portability. It’s also amazing what you can do with a few serverless lambdas and hosted services if you do need some dynamism. If you think you need to start with Docker and/or Kubernetes and a complex setup involving servers and a database, you are most likely doing it wrong. This is the Way.

This HOWTO assumes you are comfortable on the command line, capable of editing YAML and markdown files, can use git for version control, can get by in Go, and have (or can open) an Amazon Web Services account. We’ll be using S3, Route 53 for DNS, Certificate Manager, and the Cloudfront CDN. At the time of writing I am using Hugo 0.92.

Hugo Setup

Hugo comes “batteries included” for what I think most devs, and even content strategists, would want to do, and its use of common patterns meant that I didn’t add any plugins to Hugo whatsoever. Everything I needed to do was in the site’s config file (which in itself was amazing, and a huge contrast to Gatsby, which is heavily plugin-driven).

To give you a good starter template, this is what my config looks like (YMMV):


So, a few things to notice in the setup:

- It relies on some posts and pages having YAML frontmatter metadata to trigger or tweak page layouts or formats.

- It also has a few configuration options relevant to the theme, notably the [menu] TOML configuration and some social media icons (many Hugo themes have these). You can ignore them for the purposes of this setup.
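If you want something to compare against, here’s a minimal sketch (not my actual config) of the kind of file described above, written as a small Python helper that renders a bare-bones config.toml. baseURL, languageCode, title, theme, and [[menu.main]] entries are standard Hugo settings; the title, the theme directory name hermit, and the menu entries are placeholder assumptions, and your theme will likely want more.

```python
import json


def toml_str(value):
    # For plain text values like these, JSON string escaping also
    # produces a valid TOML basic string.
    return json.dumps(value, ensure_ascii=False)


def render_config(base_url, title, theme, menu):
    """Return config.toml text; menu is a list of (name, url, weight)."""
    lines = [
        "baseURL = " + toml_str(base_url),
        'languageCode = "en-us"',
        "title = " + toml_str(title),
        "theme = " + toml_str(theme),  # folder name under themes/ (assumed)
        "",
    ]
    for name, url, weight in menu:
        # Each [[menu.main]] table adds one entry to the theme's main menu.
        lines += [
            "[[menu.main]]",
            "  name = " + toml_str(name),
            "  url = " + toml_str(url),
            f"  weight = {int(weight)}",
            "",
        ]
    return "\n".join(lines)
```

Writing the result of render_config(...) to config.toml in your site root once gives you a starting point; after that, you’d just edit the TOML by hand.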

We’ll talk about the deployment section once we get to the Amazon setup, along with questions like: why did I choose to host in AWS’ Irish datacentre?

For example, this would be a standard YAML frontmatter for an actual blog post:


Pages like my About, CV, and Projects pages are handled slightly differently, with the layout key set to “page”.
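To make the shape concrete, here’s a hypothetical Python helper (not part of Hugo) that emits YAML frontmatter: one form for a blog post, and one with layout set to page for About-style pages. title, date, draft, tags, and layout are standard Hugo front matter keys; the rest of the helper, including quoting strings via JSON, is an assumption for illustration.

```python
import json
from datetime import date


def yaml_str(value):
    # JSON-quoted strings are also valid YAML double-quoted scalars.
    return json.dumps(value, ensure_ascii=False)


def front_matter(title, day, tags=(), layout=None, draft=False):
    """Return a YAML front matter block for a Hugo content file."""
    lines = [
        "---",
        "title: " + yaml_str(title),
        "date: " + day.isoformat(),
        "draft: " + ("true" if draft else "false"),
    ]
    if tags:
        lines.append("tags: [" + ", ".join(yaml_str(t) for t in tags) + "]")
    if layout:
        # e.g. "page" for About, CV, and Projects-style pages
        lines.append("layout: " + yaml_str(layout))
    lines.append("---")
    return "\n".join(lines) + "\n"
```

In day-to-day use, hugo new with an archetype generates this kind of block for you; the helper just shows what ends up at the top of each markdown file.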

Check the code on my GitHub to get an idea of how I use different templates and straight-up CSS for things like the CV, or how I used a data file with modified Go templates to autogenerate the Projects page. Note, I use a highly modified version of the Hermit theme, which you can find in Hugo Themes. I like the way it turned out. Also, take a boo at the SCSS files, since some of the stuff that might seem like magic is simple CSS tricks combined with templates and some YAML frontmatter to have a page treated differently when it’s generated.

AWS Setup

I should really just script this and release it for people at this point. Too much magic can be dangerous, though, so let’s walk through doing this in AWS.

A few comments on the setup.

I always set up my main site on a subdomain (mostly because AWS does not like root domains), and then have the top-level domain and a www. point at it. So, I prefer something like blog.yourdomain.com. Personally, I use daryl.wakatara.com as the main subdomain and have wakatara.com and www.wakatara.com redirect to it, since I find people used to look for those a lot (though most people just type my name or wakatara into their browser to end up at the site). This handles all those situations, handles the SSL connection, and serves up daryl.wakatara.com. So far, it works great.

For the purposes of this walkthrough, though, we’ll set up the site on the root domain awesomesauce.com, so the canonical site will be https://awesomesauce.com, and we’ll redirect from www.awesomesauce.com to the SSL canonical site.

There are 4 things we need to do to get all this working together:

- Configure S3 to be the source of files for the static site

- Request a security certificate to enable SSL, so the site is served over https (which also lets browsers use HTTP/2)

- Create a Cloudfront distribution to cache content for even greater speed

- Hook everything up via DNS and Route 53

I highly recommend going through the steps in order, as AWS will then pre-populate fields for you so you don’t have to backtrack to reconfigure things. Note: this walkthrough assumes you have access to and can modify the DNS of your root domains.

1. S3 configuration

So, log in to your AWS console through your browser and go to the S3 management section.

- Create an S3 bucket called awesomesauce.com.

- In the web interface, click on the second tab, called “Properties”. Go to the “static website hosting” card and click the radio button that says “Use this bucket to host a website”. Leave the default index and 404 documents as ‘index.html’ and ‘404.html’.

You now have an endpoint, http://awesomesauce.com.s3-website-eu-west-1.amazonaws.com, and your “web site hosting” is basically handled. If you upload an index.html file and point your browser at this URL, it will serve up the page.
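Because the Cloudfront step uses “Don’t use OAI (bucket must allow public access)”, the bucket also has to allow public reads: in the bucket’s “Permissions” tab, turn off “Block all public access” and add a bucket policy. Here’s a minimal Python sketch that produces the commonly used public-read policy and the website endpoint above. The bucket name and eu-west-1 region are the walkthrough’s; the note on endpoint formats is worth double-checking for your region.

```python
import json


def public_read_policy(bucket):
    """Bucket policy letting anyone GET objects, as the no-OAI setup needs."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }


def website_endpoint(bucket, region):
    """Static website endpoint in the dash style eu-west-1 uses.

    Some newer regions use "s3-website.<region>" (a dot) instead.
    """
    return f"http://{bucket}.s3-website-{region}.amazonaws.com"


if __name__ == "__main__":
    # Paste the printed JSON into the bucket policy editor.
    print(json.dumps(public_read_policy("awesomesauce.com"), indent=2))
    print(website_endpoint("awesomesauce.com", "eu-west-1"))
```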

2. Requesting a security certificate

Between LetsEncrypt and Amazon’s Certificate Manager, we should be thankful. Setting up security certificates by hand and configuring web servers to use them used to be slow and painful. It is now mostly painless. And basically, you need to have https on or your site is punished in search rankings (and some browsers will refuse to go to it, or issue warnings if they do). SSL is your friend.

So, how do we get Amazon to give us a cert?

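Go to Amazon’s Certificate Manager in your AWS console. First, switch the console’s region to US East (N. Virginia), us-east-1, since Cloudfront can only use certificates issued in that region.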

- In the fully qualified domain name field, type awesomesauce.com

- Click “Add Another Name to this certificate” and add www.awesomesauce.com

- Leave “Select Validation Method” as DNS validation

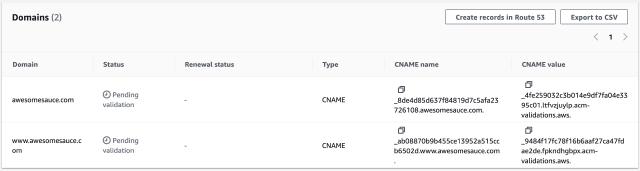

Hit the “Request Certificate” button. You’ll get a (not so obvious) message at the top of your screen that certificate fab62955-8eb3-447a-9f68-9b69b4f05d12 has been requested. Click on it and you’ll see that your certificate is now “pending validation”.

The key thing to notice here is that Amazon expects you to add CNAME records to the DNS you control for awesomesauce.com (quite obviously, you also need to control the domain awesomesauce.com, so that its nameservers point at the DNS where those records live).

Here you can just hit the “Create records in Route 53” button, and the validation records will be added to your Route 53 configuration (which we’ll go over a bit later, especially for people who use another domain provider and DNS outside Amazon). For now, hit that button; validation will complete by the time we get to the Route 53 configuration (or shortly after you point your canonical domain’s nameservers at Amazon to resolve awesomesauce.com).

3. Caching via CDN (Content Delivery Network) - Cloudfront

This site is so fast because of its CDN. A CDN keeps a cached copy of your site as close as possible to end users so there is very little latency between request and served page. Cloudfront is Amazon’s CDN as a service. For static sites, this makes things appear very fast. It’s also cheap.

Go to Cloudfront in your AWS console and start creating a new distribution.

- Enter your origin domain (the S3 bucket: awesomesauce.com.s3.eu-west-1.amazonaws.com)

- Leave origin path blank

- If Name is not awesomesauce.com.s3.eu-west-1.amazonaws.com, make it so

- Make sure the radio button for “Don’t use OAI (bucket must allow public access)” is selected.

- No custom header is required

- Leave Origin Shield at No (unnecessary with a static site)

Additional settings can be left at default except for…

Setting up SSL for Cloudfront

In the Settings section, add awesomesauce.com and www.awesomesauce.com under “Alternate domain name (CNAME)”, then go to the “Custom SSL certificate - optional” dropdown.

If you created your SSL certificate in the US East (N. Virginia) region, us-east-1 (you have to, for reasons AWS does not share and I have not quite fathomed), it should appear in the dropdown list under ACM. Choose awesomesauce.com.

That’s it. Hit the “Create Distribution” button and you’re good to go.
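To recap the Cloudfront choices in one place, here’s a hedged Python sketch that lays them out using the field names Cloudfront’s API uses (Aliases, Origins, ViewerCertificate, HttpVersion). It is not a complete distribution config. The redirect-to-https viewer policy, the TLSv1.2_2021 minimum protocol, and index.html as the default root object are my assumptions rather than steps above, and the certificate ARN in the usage is a placeholder. One caveat worth knowing: the REST-style origin above only serves index.html at the root, so if Hugo’s pretty URLs like /posts/my-post/ return errors, the usual fix is to use the bucket’s website endpoint as a custom origin instead.

```python
def distribution_settings(bucket, region, aliases, cert_arn):
    """Key console choices from this section, using Cloudfront API field names.

    A sketch only: a real DistributionConfig needs more fields
    (CallerReference, Comment, Enabled, a cache policy, and so on).
    """
    # Cloudfront only accepts ACM certificates issued in us-east-1;
    # the region is the fourth colon-separated field of the ARN.
    if cert_arn.split(":")[3:4] != ["us-east-1"]:
        raise ValueError("certificate must be issued in us-east-1")
    origin = f"{bucket}.s3.{region}.amazonaws.com"
    return {
        "Aliases": {"Quantity": len(aliases), "Items": list(aliases)},
        "Origins": {
            "Quantity": 1,
            "Items": [{"Id": origin, "DomainName": origin, "OriginPath": ""}],
        },
        "DefaultCacheBehavior": {
            "TargetOriginId": origin,
            "ViewerProtocolPolicy": "redirect-to-https",  # assumption
        },
        "ViewerCertificate": {
            "ACMCertificateArn": cert_arn,
            "SSLSupportMethod": "sni-only",
            "MinimumProtocolVersion": "TLSv1.2_2021",  # assumption
        },
        "HttpVersion": "http2",
        "DefaultRootObject": "index.html",  # assumption
    }
```

For example, distribution_settings("awesomesauce.com", "eu-west-1", ["awesomesauce.com", "www.awesomesauce.com"], "arn:aws:acm:us-east-1:123456789012:certificate/example") returns the settings for this walkthrough, while an ARN from any other region raises a ValueError.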

4. URL with a domain you want - Route 53

If you’re using Amazon and Route 53 as your domain provider, you’re practically done. You just need to point the DNS at Cloudfront rather than the S3 bucket’s URL. I am assuming that if you’ve gotten this far you can get into Route 53 and do that (since there’s a dropdown for it).

Personally, I don’t use Route 53 for my DNS needs.

I’m a big fan of DNSimple. We’ll go through that path, delegating the specific site to Amazon from another DNS provider, since it’s the harder one.

So, the trick here is to have your core nameserver and domain host delegate the domain name you want to Amazon’s nameservers.

If you’ve done the above with security certificates, your Route 53 hosted zone for awesomesauce.com should have already been created. In case it hasn’t, though, let’s go through the process of creating it from scratch.

- Go to the Route 53 service in your Amazon console.

- Under the DNS Management in your dashboard, click on “Hosted Zones” or if you don’t have any click “Create Hosted Zone”.

- Once in “Hosted Zones”, hit “Create Hosted Zone”

- Type awesomesauce.com in the domain name box (make sure Type remains “Public hosted Zone”)

- Click “Create” at bottom.

Amazon sends you to the “Record Set” screen. It has created a unique identifier for your zone (generally a long alphanumeric string starting with “Z”) and has also kindly created an NS record listing 4 nameservers, which tell the interwebs where to look up IP addresses for the domain awesomesauce.com, plus an SOA (“Start of Authority”) record.

The first thing you want to do is hit the “Create Record Set” button. This should bring up a “sidebar screen” on the right. In the name, type in the subdomain you want to serve (so, say, www.awesomesauce.com); you’ll want a record like this for each name on your certificate, including one with the name left blank for the root awesomesauce.com. Leave the Type as an “A - IPv4 record”, which should be the default.

Now, here’s a slightly tricky bit: you want Route 53 to send visitors to Cloudfront, your CDN, which serves pages from its cache and only goes back to S3 when it doesn’t have a copy of the page it needs (or its copy has expired).

Set the “Alias” radio button to Yes. This should bring up an “Alias Target” field. In it, under the Cloudfront distributions section of the list, you should see the distribution you set up in the previous section. Its domain name will look something like d6cf0rol76d6uf.cloudfront.net. If not, take your distribution’s domain name from the Cloudfront screen we saw above and type it in there.

Set “Routing Policy” to “Simple” and leave the last option, “Evaluate target health”, as No (since Route 53 cannot evaluate the health of a Cloudfront distribution).
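If you prefer the CLI to clicking, the same alias records can be described as a Route 53 change batch. This Python sketch builds one A alias record per name. Z2FDTNDATAQYW2 is the fixed hosted zone ID Route 53 uses for every Cloudfront alias target; the distribution domain is the example from above, and the names are the walkthrough’s.

```python
import json

# Route 53 uses this one hosted zone ID for all Cloudfront alias targets.
CLOUDFRONT_HOSTED_ZONE_ID = "Z2FDTNDATAQYW2"


def alias_change_batch(names, distribution_domain):
    """Build a ChangeBatch pointing each name at a Cloudfront distribution."""
    changes = []
    for name in names:
        changes.append({
            "Action": "UPSERT",  # create the record, or overwrite it if present
            "ResourceRecordSet": {
                "Name": name,
                "Type": "A",
                "AliasTarget": {
                    "HostedZoneId": CLOUDFRONT_HOSTED_ZONE_ID,
                    "DNSName": distribution_domain,
                    # Route 53 can't health-check a Cloudfront distribution.
                    "EvaluateTargetHealth": False,
                },
            },
        })
    return {"Comment": "Point the site at Cloudfront", "Changes": changes}


if __name__ == "__main__":
    batch = alias_change_batch(
        ["awesomesauce.com", "www.awesomesauce.com"],
        "d6cf0rol76d6uf.cloudfront.net",
    )
    print(json.dumps(batch, indent=2))
```

Save the output as alias.json and apply it with aws route53 change-resource-record-sets --hosted-zone-id <your zone ID> --change-batch file://alias.json, where the zone ID is the “Z…” identifier of your hosted zone.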

Route 53 should now be setup. If you use Amazon as your main DNS and nameserver provider, you’re good to go.

If not, you need to set the nameservers for this domain in your main DNS provider to the Amazon nameservers to make this work (this is slightly beyond the scope of the article, since it depends on your provider, but we’ll outline the basics here).

Setting up Your non-Amazon Domain Provider

For your domain provider, go to their DNS screen for the domain awesomesauce.com. What you want to do is point the domain at Amazon’s 4 nameservers: for a root domain, that usually means replacing the domain’s nameservers at your registrar, while for a subdomain like blog.awesomesauce.com you create 4 NS (nameserver) records for that subdomain. These tell the internet where to look for information on the addresses for your domain.

In the NS value fields, use the values from the NS record in your Route 53 hosted zone. This effectively tells your domain provider to delegate name service for that domain to Amazon’s nameservers.
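For the subdomain case, here’s a small Python sketch that prints the four NS records in zone-file form, which is roughly what you’d type into your provider’s NS fields. The nameserver names in the example are made up; copy the real ones from your hosted zone’s NS record. The one-hour TTL is an arbitrary assumption.

```python
def delegation_records(name, nameservers, ttl=3600):
    """Zone-file style NS lines delegating `name` to a Route 53 hosted zone."""
    if len(nameservers) != 4:
        # Route 53 assigns four nameservers to every public hosted zone.
        raise ValueError("expected the four nameservers from Route 53")
    records = []
    for ns in nameservers:
        fqdn = ns if ns.endswith(".") else ns + "."  # make the name absolute
        records.append(f"{name}\t{ttl}\tIN\tNS\t{fqdn}")
    return records


if __name__ == "__main__":
    example_ns = [  # made-up values; copy yours from Route 53
        "ns-111.awsdns-11.com",
        "ns-222.awsdns-22.net",
        "ns-333.awsdns-33.org",
        "ns-444.awsdns-44.co.uk",
    ]
    print("\n".join(delegation_records("blog.awesomesauce.com.", example_ns)))
```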

Setup Finish

That’s it. It can take a while for DNS to propagate depending on your settings, but basically, you’ve set up everything you need now to have a cheap, fast, and secure system that is basically maintenance-free and nearly immune to being fireballed by traffic.

Automating Deployments

As I mentioned, one of the things I loved about Hugo was the fact they baked deployments directly into the program. This has made deploys simple.
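That deployment support is driven by a [deployment] section in config.toml. Here’s a minimal sketch of one, produced by a small Python helper, following the shape of the example in Hugo’s deploy documentation. The target name, bucket, eu-west-1 region, and distribution ID E2EXAMPLE123 are placeholders, and the cache-control matcher is an optional extra from those docs rather than something this post requires.

```python
import json

# Optional matcher: cache static assets for a year and gzip them.
ASSET_PATTERN = r"^.+\.(js|css|svg|ttf)$"
ONE_YEAR = "max-age=31536000, no-transform, public"


def toml_str(value):
    # For plain values like these, JSON string escaping also
    # produces a valid TOML basic string.
    return json.dumps(value, ensure_ascii=False)


def deployment_toml(bucket, region, distribution_id, target="awesomesauce"):
    """Render a [deployment] section for Hugo's config.toml."""
    url = f"s3://{bucket}?region={region}"
    return "\n".join([
        "[deployment]",
        "",
        "[[deployment.targets]]",
        "name = " + toml_str(target),
        "URL = " + toml_str(url),
        # Lets hugo deploy invalidate this distribution's cache.
        "cloudFrontDistributionID = " + toml_str(distribution_id),
        "",
        "[[deployment.matchers]]",
        "pattern = " + toml_str(ASSET_PATTERN),
        "cacheControl = " + toml_str(ONE_YEAR),
        "gzip = true",
        "",
    ])
```

Appending deployment_toml("awesomesauce.com", "eu-west-1", "<your distribution ID>") to config.toml is enough for hugo deploy to know where to upload and which distribution to invalidate.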

If you want to see how your site will look before deploying, run hugo server in the root directory of your blog (I do this with either VS Code’s handy built-in terminal or a terminal mode in Emacs). Check to make sure your post looks the way you want (it’s always surprising how many mistakes you can make, even when you’ve done a good edit and proofread).

When you’re ready to deploy, I use the following commands to build, deploy, and invalidate the cache on Cloudfront at the same time.


hugo, of course, builds the site (ridiculously fast compared to every other static site generator I’ve used).

hugo deploy, with its switches, deletes whatever files are necessary from S3 so that the bucket mirrors what’s just been built. And of course, the CDN switch invalidates the cache on Cloudfront so it can repopulate with any changed or new content.
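As a hedged sketch of that build-then-deploy sequence, here it is as a small Python wrapper (the wrapper itself is hypothetical). --maxDeletes (where -1 removes the cap on how many remote files may be deleted), --invalidateCDN, --target, and --dryRun are hugo deploy options, but check hugo deploy --help on your version before relying on them.

```python
import subprocess


def deploy_commands(target=None, dry_run=False):
    """Return the build-then-deploy command sequence as argument lists."""
    deploy = [
        "hugo", "deploy",
        "--maxDeletes=-1",   # -1: no limit on files deploy may delete
        "--invalidateCDN",   # ask Cloudfront to drop its cached copies
    ]
    if target:
        deploy.append(f"--target={target}")  # a name from [[deployment.targets]]
    if dry_run:
        deploy.append("--dryRun")  # report changes without touching S3
    return [["hugo"], deploy]


def run(commands):
    """Run each command in order, stopping at the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)
```

From the site root, run(deploy_commands()) builds and deploys, while run(deploy_commands(dry_run=True)) shows what would change without touching the bucket.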

And that’s it!

There are a few extra things you can do here if you want to get fancier.

For example, I have a small node program that I run at the end of this as a further command to notify search engines that I’ve updated content and have them re-index the site (Google and Bing, though it also used to hit up Ping-O-Matic; I should probably update it for DuckDuckGo and a few other notable ones). This is great for SEO, though Hugo is already very SEO-friendly.

Going one step further: since I push the code up to GitHub on every commit before I deploy, with a little extra elbow grease you could craft a GitHub Action to take care of all the deployment steps for you, removing the need to bash out the command line each time. That would be especially handy if you wanted to run some sort of CI/CD process on this, like broken link checking or similar… which, now that I’ve said it, I should probably do in a future iteration of this post. 😅 It’s incredible how much link rot happens on a blog with 16 years of content. 😳
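A basic broken-link check is easy to sketch against Hugo’s build output. This hypothetical Python sketch walks the generated public/ directory, collects href and src values, and reports internal links with no matching file, treating a directory as its index.html the way pretty URLs work. It deliberately skips external sites, so it won’t catch link rot elsewhere on the web; pass your own host as site_host so absolute links to your domain are checked too.

```python
import os
from html.parser import HTMLParser
from urllib.parse import unquote, urlparse


class LinkCollector(HTMLParser):
    """Collects href and src attribute values from one HTML page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("href", "src") and value:
                self.links.append(value)


def target_exists(public_dir, page_path, link, site_host=None):
    """True if the link is external or resolves to a file in public_dir."""
    parsed = urlparse(link)
    if parsed.netloc and parsed.netloc != site_host:
        return True  # another site: not checked here
    if parsed.scheme not in ("", "http", "https"):
        return True  # mailto:, tel:, data: and friends
    if not parsed.path:
        return True  # "#anchor" or "?query" on the same page
    path = unquote(parsed.path)
    if path.startswith("/"):
        candidate = os.path.join(public_dir, path.lstrip("/"))
    else:
        candidate = os.path.join(os.path.dirname(page_path), path)
    if os.path.isdir(candidate):
        candidate = os.path.join(candidate, "index.html")  # pretty URLs
    return os.path.isfile(candidate)


def broken_links(public_dir="public", site_host=None):
    """Return (page, link) pairs for internal links with no matching file."""
    broken = []
    for root, _dirs, files in os.walk(public_dir):
        for fname in files:
            if not fname.endswith(".html"):
                continue
            page = os.path.join(root, fname)
            collector = LinkCollector()
            with open(page, encoding="utf-8") as f:
                collector.feed(f.read())
            for link in collector.links:
                if not target_exists(public_dir, page, link, site_host):
                    broken.append((os.path.relpath(page, public_dir), link))
    return broken
```

Run hugo first, then something like print(broken_links("public", site_host="awesomesauce.com")); in a GitHub Action you could fail the build whenever the list isn’t empty.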

Fin

And that’s it! You should now have a durable, near maintenance-free setup which gives you an inexpensive, super-fast, and easily deployable static site which will be the envy of geeks everywhere. Now it’s up to you to put some great and compelling content there for the people who would actually read it. Go forth!

So, I hope this HOWTO helped you out and you’re now sitting proudly with your hosted static site and a setup that mirrors mine. Feel free to mention me on Mastodon at @awws to let me know if you are. I’m always curious to hear about people’s experiences after reading this, and how I might improve the post or what may be even better (or wrong). You can also reach me via email at hola@wakatara.com.